The promise of Generative Artificial Intelligence (GenAI) seems limitless, from crafting compelling marketing copy to answering complex customer queries. But while large language models (LLMs) are impressive, they often lack the specific, up-to-date knowledge needed to deliver on that potential, especially in a business context. This is where Retrieval-Augmented Generation, or RAG, steps in. This approach is transforming how AI systems process and deliver information, bridging the gap between impressive language generation and real-world application.

The Problem with Generic AI

Imagine deploying a chatbot built on a general-purpose LLM to answer customer questions about your products. While it might eloquently describe the general concept of your offerings, it could falter when faced with questions about specific features, the latest updates, or stock availability. This is because LLMs are trained on massive datasets that may not include your organization’s unique information and that stop at a fixed point in time, so recent changes are missing.

The result? Inaccurate or irrelevant responses that frustrate users and erode trust in your AI implementation.

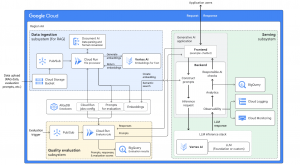

Infrastructure for a RAG-capable generative AI application using Google Cloud services

RAG: Injecting Context and Accuracy into GenAI

RAG is a technique that enhances the capabilities of Generative AI and large language models (LLMs) by combining them with external knowledge sources. Think of it as giving an AI system the ability to fact-check and update its knowledge in real time. Google’s recent Data and AI Trends 2024 report highlights the growing importance of operational data in unlocking the potential of generative AI for enterprise applications, making RAG a crucial technology for businesses looking to leverage GenAI.

How RAG Works: A Simplified View

- Knowledge Integration: All relevant data is transformed into a standardized format and stored in a searchable knowledge library.

- Vectorization: An embedding model converts this information into numerical representations called vector embeddings, enabling efficient searching and retrieval.

- Query Processing: When a user asks a question, the system converts the query into a vector in the same way and searches the knowledge library for the entries most similar to it.

- Contextualized Response: The LLM receives both the user’s query and the retrieved contextual information, generating an accurate, specific, and up-to-date response.
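
The four steps above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not a production design: the documents and the product name are invented, `embed` is a stand-in that counts words rather than calling a real embedding model, and the call to the LLM itself is left out, so the sketch stops at the prompt that would be sent.

```python
import math
import re
from collections import Counter

# Step 1: a hypothetical knowledge library (invented example documents).
DOCUMENTS = [
    "The Aurora X2 headphones offer 30 hours of battery life.",
    "Orders ship within two business days from our Madrid warehouse.",
    "The loyalty program awards one point per euro spent.",
]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Step 2: vectorize every document once, ahead of time.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(query: str, k: int = 1) -> list[str]:
    """Step 3: vectorize the query and return the k most similar documents."""
    query_vector = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str, k: int = 1) -> str:
    """Step 4: combine the retrieved context and the question for the LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("How long is the battery life of the headphones?"))
```

In a real deployment the word-count vectors would be replaced by embeddings from a trained model and the list scan by a vector database, but the flow of index, retrieve, and prompt stays the same.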

Key Benefits of RAG for Your Business

Most importantly, RAG improves the accuracy and relevance of AI-generated responses by grounding answers in the organization’s specific data, which boosts trust and satisfaction. It also gives the model access to the latest data at query time, so responses stay current, a crucial capability for dynamic fields like finance or customer service.

Moreover, RAG can lead to significant cost savings. By indexing relevant information and retrieving only the most pertinent data to answer a question, RAG allows the use of much smaller prompts. This is particularly advantageous when dealing with large datasets, such as an entire website. New models like Gemini 1.5 can accept up to 2 million tokens in a single prompt, but sending that much text with every simple query would be prohibitively expensive, since usage is typically billed by the number of tokens processed. RAG keeps each prompt small, making the process more cost-effective.
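
A rough back-of-the-envelope calculation shows the scale of the difference. The numbers below are assumptions chosen for illustration only: the price is a placeholder rather than any provider's actual rate, and the chunk count and chunk size are typical-looking guesses. Only the 2 million token figure comes from the text above.

```python
# Placeholder price per million input tokens (hypothetical, arbitrary currency units).
PRICE_PER_MILLION_INPUT_TOKENS = 1.00


def prompt_cost(tokens: int, price_per_million: float = PRICE_PER_MILLION_INPUT_TOKENS) -> float:
    """Input cost of one prompt, assuming billing is proportional to token count."""
    return tokens / 1_000_000 * price_per_million


# Full-context approach: the whole corpus is sent with every query.
full_context_tokens = 2_000_000

# RAG approach (assumed): 5 retrieved chunks of about 400 tokens, plus a 100-token question.
rag_tokens = 5 * 400 + 100

full_cost = prompt_cost(full_context_tokens)
rag_cost = prompt_cost(rag_tokens)
ratio = full_context_tokens / rag_tokens

if __name__ == "__main__":
    print(f"Full-context prompt: {full_context_tokens:,} tokens, cost {full_cost:.4f}")
    print(f"RAG prompt:          {rag_tokens:,} tokens, cost {rag_cost:.4f}")
    print(f"The full-context prompt is about {ratio:.0f}x larger")
```

Because both costs scale with the same per-token price, the ratio between them does not depend on the placeholder price, only on the prompt sizes.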

By delivering personalized and context-aware interactions through chatbots, virtual assistants, and other GenAI-powered applications, RAG can significantly improve the customer experience. Furthermore, RAG empowers employees with access to comprehensive and contextualized information, facilitating better-informed decisions and streamlining decision-making processes across the organization.

Examples of RAG in Action

The applications for RAG are vast, offering exciting possibilities for businesses across various sectors. Here are a few examples of how RAG is bridging the gap between powerful language generation and real-world problem-solving:

- Elevated Customer Service with Smarter Chatbots: Imagine chatbots that go beyond generic responses. RAG empowers chatbots to tap into a wealth of knowledge, pulling up specific product details, troubleshooting guides, or even personalized order information. This means faster, more accurate resolutions for customers and reduced strain on human support teams.

- Data-Driven Insights from Social Listening: Social media is a goldmine of customer sentiment, but sifting through the noise can be overwhelming. RAG can supercharge social listening by not only identifying brand mentions but also analyzing the context and sentiment behind them. This allows businesses to understand customer perceptions, track campaign effectiveness, and identify emerging trends with greater precision.

- Next-Level Personalization for Loyalty Programs: Generic rewards don’t resonate with today’s discerning customers. RAG can analyze individual customer data, from purchase history to browsing behavior, to tailor loyalty program recommendations and offers. Imagine receiving a discount on a product you’ve recently viewed or being rewarded for consistent engagement with a brand you love. This level of personalization fosters stronger customer relationships and drives loyalty.

Integrating RAG into Existing Systems

While the potential of RAG is immense, integrating it into existing systems requires careful planning and execution. Businesses must consider factors such as data preprocessing, model selection, and parameter tuning to ensure optimal performance. Additionally, organizations should be prepared to address potential challenges, such as data quality issues or the need for specialized hardware to handle large-scale deployments. By working with experienced partners and allocating sufficient resources, businesses can successfully navigate these complexities and unlock the full value of RAG for their specific use cases.
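
As one concrete example of the preprocessing and parameter tuning mentioned above, many RAG pipelines split long documents into overlapping chunks before indexing them. The sketch below is a simple word-based splitter written for illustration; the function name `chunk_words` and the default values are assumptions, and real systems often split by tokens, sentences, or document structure instead.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of up to chunk_size words, each sharing
    `overlap` words with the previous chunk so context is not cut abruptly."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(12))
    for chunk in chunk_words(sample, chunk_size=5, overlap=2):
        print(chunk)
```

Both `chunk_size` and `overlap` are tuning parameters: larger chunks carry more context per retrieved result but make prompts longer, while smaller chunks are cheaper but may separate related sentences.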

Conclusion: The Future of AI is Contextual

RAG represents a significant leap forward in Generative AI, moving beyond generic language generation towards truly intelligent systems capable of understanding and responding to the nuances of your business. As RAG technology continues to evolve, we can expect to see more sophisticated applications. Future systems might not only provide information but also take actions based on that information, opening up new possibilities for automation and decision-making support.

At Making Science, we understand that the future of Generative AI lies in its ability to process and leverage information within its proper context. While RAG is still an evolving field, its potential to transform business operations is undeniable. As experts in data-driven solutions, Making Science is committed to helping our clients navigate the evolving landscape of Generative AI and unlock the power of technologies like RAG to drive business success.
